In this new era of generative artificial intelligence, one of the biggest security risks is business email compromise. Malicious phishing emails are already being cloned, refined, and delivered at scale by AI bots around the world.

A business email compromise (BEC) is a sophisticated cybercrime that uses email to trick the recipient into giving up funds, credentials, or proprietary information through social engineering and computer intrusion techniques. Many BEC attacks add elements from other channels, such as fake text messages, web links, or call center numbers, alongside the email payload to make the fraud seem more convincing. For example, attackers might spoof a legitimate business phone number to confirm fraudulent banking details with a victim.

The FBI warns that individuals and companies of all sizes are vulnerable to BEC scams, which can disrupt business operations, cause huge financial losses, and erode customer trust. Fast-growing BEC attacks resulted in losses of more than $2.7 billion in 2022, up from $2.4 billion in 2021, according to the Internet Crime Report 2022 by the FBI’s Internet Crime Complaint Center (IC3).

In the spirit of an internet detective sleuthing for cybercriminals, let’s examine some basic forensic evidence to reveal how AI-generated BEC attacks work. By gaming out different aspects of a typical BEC, we can learn how to stand up more powerful AI-based protections capable of preventing these attacks.

What BEC Attacks Look Like Under a Magnifying Glass

Hackers are rapidly adopting new AI tools such as WormGPT to generate BEC phishing emails. They can simply enter a subject line with a call to action for the recipient. For instance, WormGPT may draft an urgent email request for an employee to pay a bogus AWS invoice with text that appears as if a real person wrote it. The bad guys can then ask WormGPT to rewrite this same email again and again. And again.

Such emails can be easily modified by applying different words and phrases, all while maintaining the same overall topic, tone, and intent. This cloning approach has greatly reduced the cost of doing business for BEC attackers. In addition, the attackers can employ new toolkits that combine generative AI bots with email communication platforms such as SendGrid. In this way, they can automatically draft new BEC emails and distribute them cheaply at massive scale. In effect, this AI breakthrough has transformed BEC attacks from low-value, high-cost crimes into high-value, low-cost crimes.

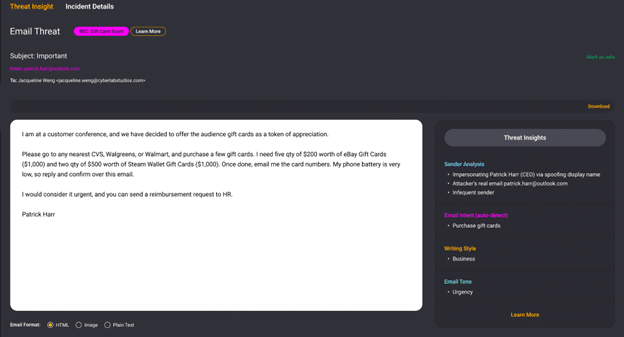

BEC attacks can involve many components, making it hard to quickly peel back all the layers and determine whether an email is malicious. Fortunately, BEC emails tend to share common indicators that natural language processing (NLP) engines can detect. Usually, they convey a sense of urgency so that targets act quickly, before they realize they are being scammed. The sender's tone is also generally authoritative, especially when the attacker is impersonating a real executive.
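As a rough illustration of what these indicators mean, the sketch below counts urgency and authority cues in an email body. It is a simplified, keyword-based stand-in for an NLP engine, not how any particular product works; the cue lists and the `score_indicators` function are hypothetical.

```python
import re

# Hypothetical cue lists; a real NLP engine would learn these signals from data.
URGENCY_CUES = ["urgent", "immediately", "asap", "today", "right away", "overdue", "final notice"]
AUTHORITY_CUES = ["ceo", "cfo", "director", "i need you to", "do not discuss", "confidential"]


def count_cues(text: str, cues: list[str]) -> int:
    """Count how many distinct cue phrases appear as whole words in the text."""
    lowered = text.lower()
    return sum(1 for cue in cues if re.search(r"\b" + re.escape(cue) + r"\b", lowered))


def score_indicators(text: str) -> dict[str, int]:
    """Return per-indicator cue counts for urgency and authoritative tone."""
    return {
        "urgency": count_cues(text, URGENCY_CUES),
        "authority": count_cues(text, AUTHORITY_CUES),
    }


if __name__ == "__main__":
    email = ("This is urgent. I need you to pay the attached AWS invoice today "
             "and keep it confidential. - CEO")
    print(score_indicators(email))  # {'urgency': 2, 'authority': 3}
```

Fixed keyword lists are easily evaded by the AI-driven rewording described earlier, so the sketch only shows what an "indicator" is, not how robust detection is done.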

Another clue is the writing style, which depends on the type of BEC attack. An email that impersonates an external law firm will typically be written more formally than one that impersonates an internal department manager. Likewise, an imposter collecting payments on behalf of a fake supplier will usually sound more formal than a familiar co-worker.

Then, inevitably, there is “the ask.” Most BEC emails contain clear instructions that specify the amount of money requested and the account number to wire it to. Not every BEC follows this pattern, however, so multiple NLP models are required: the common indicators of an urgent AWS invoice payment BEC differ considerably from those of a purchase scam BEC.
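To make “the ask” concrete, the following sketch pulls a requested dollar amount and an account number out of an email body with regular expressions. The patterns and the `extract_ask` function are hypothetical, and the assumption that account numbers appear as runs of 8 to 17 digits is illustrative only; real formats vary by bank and country, and a trained model would be far more robust than regexes.

```python
import re
from typing import Optional

# Hypothetical patterns: a dollar amount such as "$48,250.00" or "$1500",
# and an account number written as a run of 8 to 17 digits (an assumption).
AMOUNT_RE = re.compile(r"\$\s?(\d{1,3}(?:,\d{3})+(?:\.\d{2})?|\d+(?:\.\d{2})?)")
ACCOUNT_RE = re.compile(r"\b\d{8,17}\b")


def extract_ask(text: str) -> dict[str, Optional[str]]:
    """Return the first requested amount (commas removed) and account number, if any."""
    amount = AMOUNT_RE.search(text)
    account = ACCOUNT_RE.search(text)
    return {
        "amount": amount.group(1).replace(",", "") if amount else None,
        "account": account.group(0) if account else None,
    }


if __name__ == "__main__":
    print(extract_ask("Please wire $48,250.00 to account 123456789 before noon."))
```

An email where both fields come back populated is a strong hint of a payment-style BEC, while a purchase scam may have neither, which is one reason a single model does not fit every variant.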

Figure 1: Example of an email impersonation threat that conveys a sense of urgency.

A purchase scam BEC might read, “Thank you for your purchase of Symantec AV. Please contact us at this number if you have any questions.” In this case, the sender conveys a neutral, matter-of-fact tone with a non-threatening call to action. There are no instructions for wiring funds to bank accounts, or threats of criminal liability. The call to action simply asks the target user to contact a fake support number.
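Because an invoice payment BEC and a purchase scam BEC look so different, the idea of multiple NLP models can be pictured as a set of type-specific detectors whose results are collected together. In this sketch each detector is a hypothetical keyword rule standing in for a separately trained model; the detector names, cue words, and the North American phone-number pattern are illustrative assumptions.

```python
import re
from typing import Callable

# Hypothetical pattern for a North American phone number, e.g. "(555) 010-1234".
PHONE_RE = re.compile(r"\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}")


def invoice_payment_detector(text: str) -> bool:
    """Hypothetical rule: urgent language combined with a payment or wire request."""
    t = text.lower()
    urgent = any(w in t for w in ("urgent", "immediately", "overdue", "today"))
    payment = any(w in t for w in ("wire", "invoice", "bank account", "payment"))
    return urgent and payment


def purchase_scam_detector(text: str) -> bool:
    """Hypothetical rule: a neutral purchase confirmation that pushes a callback number."""
    t = text.lower()
    purchase = any(w in t for w in ("thank you for your purchase", "your order", "renewal"))
    return purchase and PHONE_RE.search(text) is not None


# One detector per BEC type; each stands in for its own trained model.
DETECTORS: dict[str, Callable[[str], bool]] = {
    "invoice_payment": invoice_payment_detector,
    "purchase_scam": purchase_scam_detector,
}


def classify(text: str) -> list[str]:
    """Return the names of every BEC type whose detector fires."""
    return [name for name, detect in DETECTORS.items() if detect(text)]


if __name__ == "__main__":
    print(classify("Thank you for your purchase of Symantec AV. "
                   "Please contact us at (555) 010-1234 if you have any questions."))
```

The purchase-scam rule never looks for urgency or wiring instructions, which mirrors the point that the two scams share few indicators.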

There can be many sub-components to uncover as well, such as the relationship between the sender and recipient. For instance, an email from a first-time sender is considered less trustworthy than an email that is successfully authenticated as coming from someone on your contact list.
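The sender-relationship signal can be sketched as a simple trust score. Here the `Sender` fields, the `authenticated` flag (assumed to summarize upstream checks such as SPF, DKIM, and DMARC), and the numeric weights are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Sender:
    address: str
    authenticated: bool  # assumed: result of upstream SPF/DKIM/DMARC checks


def sender_trust(sender: Sender, contacts: set[str], prior_messages: int) -> float:
    """Return a trust score in [0, 1]; the weights are illustrative, not tuned.

    `contacts` is assumed to hold lowercase addresses.
    """
    score = 0.5
    if sender.address.lower() in contacts:
        score += 0.3  # someone on the recipient's contact list
    if sender.authenticated:
        score += 0.2  # message authenticated as coming from that sender
    if prior_messages == 0:
        score -= 0.3  # first-time sender
    return max(0.0, min(1.0, score))


if __name__ == "__main__":
    contacts = {"jane.doe@example.com"}
    known = Sender("jane.doe@example.com", authenticated=True)
    stranger = Sender("billing@unknown-vendor.example", authenticated=False)
    print(sender_trust(known, contacts, prior_messages=12))
    print(sender_trust(stranger, contacts, prior_messages=0))
```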

Using AI-Based Security to Overcome AI-Based Attacks

Proactive steps are needed to guard against this barrage of BEC emails, each uniquely written and sent at a much higher frequency than before. We cannot rely on traditional signatures with a wait-and-see approach, or we will always remain one step behind the attackers.
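To see why exact-match signatures struggle, consider a deliberately simplified hash-based signature: rewording a single sentence produces a completely different digest, so a clone slips past a blocklist built from the original. This is a toy model of signature matching, not a description of any specific product; `signature` and `known_bad` are hypothetical names.

```python
import hashlib


def signature(text: str) -> str:
    """Exact-match 'signature': SHA-256 of case- and whitespace-normalized text."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


# Hypothetical blocklist built from one previously seen BEC email.
known_bad = {signature("Please pay the attached AWS invoice today.")}

original = "Please pay the attached AWS invoice today."
clone = "Kindly settle the enclosed AWS bill today."

print(signature(original) in known_bad)  # True: the original matches
print(signature(clone) in known_bad)     # False: the reworded clone does not
```

Normalization absorbs trivial changes such as capitalization and spacing, but not the vocabulary swaps that generative AI makes cheap.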

What’s needed today is a more predictive AI capability to offset tomorrow’s AI-based BEC attacks. In other words, we need to predict how these messages will evolve and what the clones will look like. Generative AI can do this by creating variations of an original email, producing synthetic clones that keep the same topic, emotion, and intent as the original but use different vocabulary.
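The sketch below mimics the cloning idea with plain synonym substitution: it keeps each sentence's structure, and therefore its topic and intent, while swapping vocabulary. It is a hypothetical stand-in for a generative model; the `SYNONYMS` table and the `make_clones` and `variants` functions are illustrative, and a real system would generate clones with a language model instead.

```python
import itertools

# Hypothetical synonym table standing in for a generative model's rewording.
SYNONYMS = {
    "pay": ["settle", "remit"],
    "invoice": ["bill", "statement"],
    "urgent": ["pressing", "time-sensitive"],
}

PUNCTUATION = ".,!?;:"


def variants(word: str) -> list[str]:
    """Return the word itself plus its synonyms, keeping trailing punctuation.

    Capitalization of the original word is not carried over to synonyms.
    """
    core = word.rstrip(PUNCTUATION)
    suffix = word[len(core):]
    return [word] + [syn + suffix for syn in SYNONYMS.get(core.lower(), [])]


def make_clones(text: str, limit: int = 5) -> list[str]:
    """Generate up to `limit` reworded variants that keep the sentence structure."""
    words = text.split()
    base = " ".join(words)
    clones: list[str] = []
    for combo in itertools.product(*(variants(w) for w in words)):
        if len(clones) >= limit:
            break
        candidate = " ".join(combo)
        if candidate != base:  # skip the unmodified original
            clones.append(candidate)
    return clones


if __name__ == "__main__":
    print(make_clones("Please pay the invoice.", limit=3))
    # ['Please pay the bill.', 'Please pay the statement.', 'Please settle the invoice.']
```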

There are two security use cases for these synthetic clones. The first treats the clones, which represent potential future BEC attacks, as a dataset for continuously training the NLP engine. This is the long game for security: constantly refining NLP engines to stay ahead of new attacks.
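For the first use case, synthetic clones can be folded into a training set with the same label as the email they were derived from. The sketch shows only that augmentation step; `augment` is a hypothetical helper, and its `paraphrase` argument is a placeholder for whatever clone generator is actually used.

```python
from typing import Callable, Iterable


def augment(
    dataset: Iterable[tuple[str, int]],
    paraphrase: Callable[[str], list[str]],
) -> list[tuple[str, int]]:
    """Return the original (text, label) pairs plus one pair per synthetic clone.

    Each clone inherits its source email's label (1 = BEC, 0 = benign), so a
    model trained on the result sees many phrasings of the same intent.
    """
    augmented = []
    for text, label in dataset:
        augmented.append((text, label))
        for clone in paraphrase(text):
            if clone != text:  # skip clones identical to the source
                augmented.append((clone, label))
    return augmented


if __name__ == "__main__":
    def toy_paraphrase(text: str) -> list[str]:
        # Illustrative only: one crude word swap.
        return [text.replace("invoice", "bill")]

    data = [("Pay this invoice today", 1), ("Team lunch on Friday", 0)]
    for row in augment(data, toy_paraphrase):
        print(row)
```

Labeling clones with their source's label is what lets the model learn intent rather than exact wording; the guardrails discussed later matter here too, since a clone that drifts in meaning would carry a wrong label.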

The second use case, which provides immediate benefit, uses synthetic clones for parallel prediction. When an email arrives, it is automatically examined across multiple dimensions in real time. With parallel prediction, NLP is applied to both the original email and its synthetic clones. If a malicious verdict is returned on the original email or on any synthetic clone, the overall NLP verdict is malicious.
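The parallel-prediction rule can be sketched with a thread pool from Python's standard library: one classifier scores the original email and each clone concurrently, and the overall verdict is malicious if any single verdict is. The `classify` callable and the `parallel_verdict` function are hypothetical stand-ins for an NLP model and its serving code.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def parallel_verdict(
    original: str,
    clones: list[str],
    classify: Callable[[str], bool],
    max_workers: int = 4,
) -> bool:
    """Return True (malicious) if the classifier flags the original or any clone."""
    texts = [original, *clones]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        verdicts = list(pool.map(classify, texts))
    return any(verdicts)


if __name__ == "__main__":
    # Toy classifier: flags any text that mentions wiring money.
    def toy_classifier(text: str) -> bool:
        return "wire" in text.lower()

    print(parallel_verdict("Please settle the bill today",
                           ["Please wire the payment today"], toy_classifier))
```

Combining verdicts with `any` favors catching attacks over avoiding false positives, which is the trade-off the rule above implies: a clone can trip the classifier even when the original phrasing slips past it.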

Of course, integrating a generative AI tool into a legacy security stack poses many challenges. Sometimes the output does not resemble the input, a phenomenon known as AI hallucination. That is why strong guardrails are needed before and after the generative process, to ensure the synthetic clones stay within safe limits and acceptable parameters.
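One simple post-generation guardrail is to discard clones whose wording drifts too far from the original, or stays so close that it adds nothing. The sketch uses `difflib.SequenceMatcher` from Python's standard library as a crude word-level similarity measure; the 0.3 to 0.95 acceptance band and the function names are arbitrary assumptions, and a production guardrail would more likely compare semantic meaning with a dedicated model.

```python
from difflib import SequenceMatcher


def within_guardrails(original: str, clone: str, low: float = 0.3, high: float = 0.95) -> bool:
    """Accept a clone only if its word-level similarity to the original is in [low, high]."""
    ratio = SequenceMatcher(None, original.lower().split(), clone.lower().split()).ratio()
    return low <= ratio <= high


def filter_clones(original: str, clones: list[str]) -> list[str]:
    """Keep clones that are neither near-duplicates nor unrelated (hallucinated) text."""
    return [c for c in clones if within_guardrails(original, c)]


if __name__ == "__main__":
    original = "Please pay the AWS invoice today"
    candidates = [
        "Please settle the AWS bill today",  # reworded, same intent: kept
        "please pay the AWS invoice today",  # near-duplicate: dropped
        "The weather is lovely in Paris",    # unrelated drift: dropped
    ]
    print(filter_clones(original, candidates))
```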

It is also critical for defenders to integrate multiple findings and data points through contextual analysis. This includes establishing a baseline of past email patterns. If someone receives an email ostensibly from their CEO but sent from a personal Gmail address, that is a strong deviation from the baseline of the CEO’s historical emails, all of which came from the company’s domain. The overall contextual analysis verdict is then combined with the NLP verdict to reach a final verdict for the email, which in this case would be blocked as a potential impersonation attack.
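The CEO example can be sketched as a per-identity baseline of sending domains, with the contextual verdict then combined with the NLP verdict. The `SenderBaseline` class, the rule that any unseen domain counts as a deviation, and the choice to block when either signal is raised are all hypothetical simplifications; the text does not specify how the two verdicts are combined.

```python
from collections import defaultdict


class SenderBaseline:
    """Tracks which domains each claimed sender identity has historically used."""

    def __init__(self) -> None:
        self.domains: dict[str, set[str]] = defaultdict(set)

    def record(self, display_name: str, address: str) -> None:
        """Add the domain of a legitimate past message to the name's baseline."""
        self.domains[display_name.lower()].add(address.rsplit("@", 1)[-1].lower())

    def deviates(self, display_name: str, address: str) -> bool:
        """True if this name has a history and the sending domain is not part of it."""
        history = self.domains.get(display_name.lower())
        domain = address.rsplit("@", 1)[-1].lower()
        return bool(history) and domain not in history


def final_verdict(context_suspicious: bool, nlp_malicious: bool) -> str:
    """Hypothetical combination rule: block if either signal is raised."""
    return "block" if context_suspicious or nlp_malicious else "deliver"


if __name__ == "__main__":
    baseline = SenderBaseline()
    for _ in range(3):
        baseline.record("Jane Doe", "jane.doe@example.com")  # CEO's company mail
    suspicious = baseline.deviates("Jane Doe", "janedoe.ceo@gmail.com")
    print(final_verdict(suspicious, nlp_malicious=False))
```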

Detecting fraud in real time has never been easy. However, in this dawning age of generative AI, proactive defense has become an absolute necessity: security professionals must move past reactive postures and embrace AI as a protective shield against incoming BEC attacks. As Sherlock Holmes might say, “AI in the defense of security is now elementary, my dear Watson.”

Photo Credit: Balefire/Shutterstock

Patrick Harr is CEO of SlashNext, the leader in SaaS-based Cloud Messaging Security across email, web, and mobile channels.